The Technological Richter Scale

And some other reasons to rethink your IT department's approach to AI adoption

Most people are looking for an excuse not to grapple with the reality of AI. Nate Silver, the renowned U.S. sports and political forecaster, is not one of them. He first became famous in 2008, when the forecasting system he developed correctly predicted the outcome in forty-nine of the fifty states in that year’s U.S. presidential election. His success has continued since.

For his new book, On the Edge, Silver conducted about 200 interviews in “the River,” his name for a community of people skilled at mastering risk who appear to dominate much of modern life. The interviewees include a substantial cross-section of the U.S. intellectual and executive class: Sam Altman, Paul Graham, Elon Musk, Doyle Brunson, Peter Thiel, Sam Bankman-Fried, Daniel Kahneman, Kathryn Sullivan, H.R. McMaster, and others.

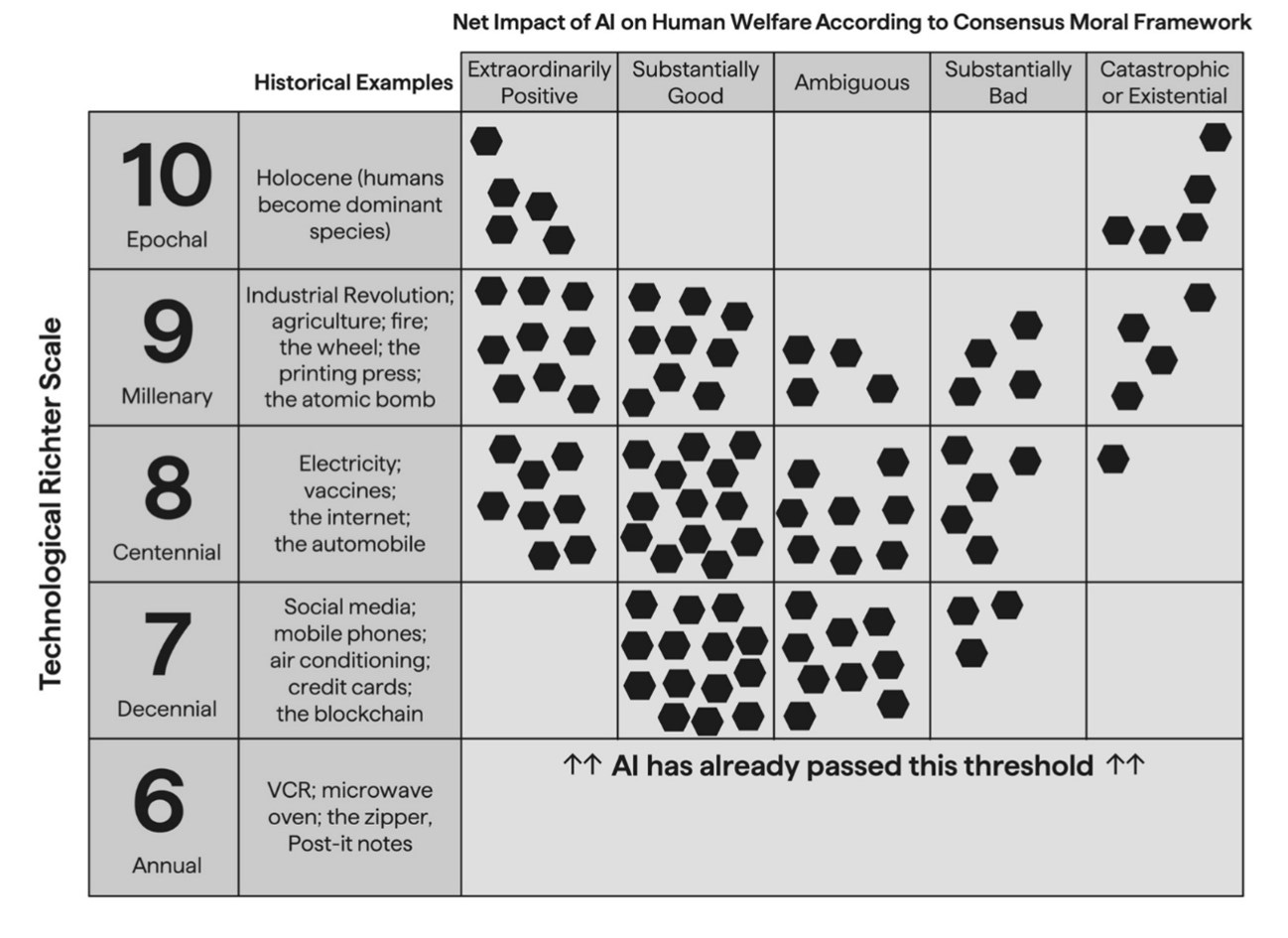

Near the end of the book, Silver discusses AI risk and introduces the Technological Richter Scale (TRS), a framework he uses to make rough predictions about AI’s potential impact on our future.

It’s not perfect, but it reveals much about Silver’s prediction model. The selection of innovations is subjective, and ongoing developments in AI are difficult to measure. One could reasonably argue that historical innovations have had decades to millennia to demonstrate their long-term effects, which makes it hard to place a new technology like AI in the same context. Still, Silver’s forecasting track record commands respect, and his chart raises the level of the current debate over “p(doom).” As Silver himself notes, “The question [wasn’t] well defined.” He offers a redefinition:

In the graphic, I’ve sought to visualize [] with the one hundred [and one] hexagons … the uncharted possible AI futures. Don’t take their exact placement too literally, but they reflect my rough general view. Technological advancement has on balance had extremely positive effects on society, so the outcomes are biased in our favor. But the further we go up the Technological Richter Scale, the less of a reference class we have and the more we’re just guessing.

Put another way, Silver’s model projects roughly a 40% chance that AI’s impact will be at a millennial or epochal level, on par with the taming of fire or the emergence of a new hominid species. There’s another 30% chance that AI will be as transformative as electricity, vaccines, the internet, or the automobile. The remaining probability, roughly 30%, is that it will be only as impactful as social media or mobile phones.

The leaders of the major AI labs are not aiming merely to match Thomas Edison or Henry Ford. Recently, the U.S. government revealed that Microsoft and OpenAI had approached the state of North Dakota about building $125 billion AI chip clusters, designed to develop AI models orders of magnitude larger than GPT-4. Silver’s interview with Sam Altman, the CEO of OpenAI, captures the ambition behind these moves:

Altman also has his sights set on the [TRS chart] 9s and above. He told me that AI could end poverty, that its impact would be “far greater” than that of the computer, and that it would “increase the rate of scientific discovery... to a rate that is sort of hard to imagine.”

The large uncertainty in Silver’s chart mostly stems from his favourite complaint: not enough historical reference points. But there are also several reasonable technical arguments against AI having a transformative impact: current AI may be nearing the top of its capability curve; advanced AI may require more computing power than we could even theoretically build; or even advanced AI may be limited by the data it’s trained on, able only to recombine existing types of information creatively rather than handle wildly novel input or output. Coherent as they are, these arguments currently look like engineering constraints to be challenged rather than unbridgeable barriers.

(Indeed, today’s technical challenges for AI seem closely analogous to yesterday’s engineering problems with self-driving cars, another general-purpose technology whose problems appear to have been worked out only recently. Waymo’s driverless cars have just reached a market share of roughly 5% or more in San Francisco and now appear destined to climb higher.)

Three sets of non-technical objections to AI’s potential impact, drawn with gratitude from “34 arguments against transformational AI,” need to be dispelled. The first set involves fuzzy adjectives: “Look how stupid current AIs are; they can’t even solve <some simple thing>.” These objections misunderstand what matters in the claim that AI will be transformative. The ambiguity of adjectives like intelligent, reasoning, creative, goal-oriented, or reliable does not matter if AI displays genuinely impactful capabilities.

The second set of objections is rooted in human exceptionalism. We are exceptional. But “intelligence” is neither limited nor irrelevant without human traits, nor is it inherently inferior to human uniqueness. “Intelligence” doesn’t require human sentience, consciousness, empathy, love, or any other human-ness to have a transformative impact on society.

The third set consists of objections based on reflexive errors: anything overhyped must be fake; it can only make copies of its training data; it’s only math, and math doesn’t do anything; it’s a tool, and tools are harmless; science fiction or religion shows that it can or can’t be impactful; it’s ill-defined, so it can’t be real; and any other dismissive attitude that simply rejects the entire premise of AI impact. Zvi writes:

Even when people freak out a little, they have the shortest of memories. All it took was 18 months of no revolutionary advances or catastrophic events, and many people are ready to go back to acting like nothing has changed or ever will change, and there will never be anything to worry about.

So What?

The point, put to you directly, is that adopting AI is too large a lift to treat as an IT issue. The IT department has some smart folks, but this is not merely a tech upgrade. It affects every process used by every person. Remember, the minimum scale of change on Silver’s chart is cell phones and social media, two innovations with distinctly ambiguous outcomes.

The impact of AI is both enormous and uncertain. Silver’s chart assigns a 21% chance to that ambiguous, social-media-level outcome and a combined 22% chance to substantially bad, catastrophic, or existential outcomes. This ‘downside’ is a blind spot for some AI leaders:

This is where Altman’s glass-half-full view of technology is most obvious. Once we get into the 9s and up, we have few recent precedents to draw from, and the ones we have (like nuclear weapons) aren’t entirely reassuring. Under such a scenario, we can say that AI’s impact would probably either be extraordinary or catastrophic—but it’s hard to know which.

Silver dedicates significant thought to whether Altman, like Sam Bankman-Fried, might be willing to take hellish risks for a slightly better chance of heavenly rewards:

“There is this massive risk, but there’s also this massive, massive upside,” said Altman when I spoke with him in August 2022. “It’s gonna happen. The upsides are far too great … the upsides are so great, we can’t not do it.”

Silver concludes that while the consensus in Silicon Valley is that Altman isn’t as reckless as Bankman-Fried, both figures are part of a culture “irresistibly drawn toward the path of risk.” And so is Nate Silver.

What Now?

At a minimum, integrating AI into your work and life is an all-hands-on-deck matter. You owe it to the future to open your mind, guide this technology in a positive direction, and push back against those who deny the reality of its (variable but) guaranteed impact.

Some people will focus on potential epochal transformations. Most of us, though, simply need to figure out how to use this general-purpose technology to remove even more tedious work without destroying the meaning of jobs; consider AI’s role in professional and other kinds of education; use it well without offloading our thinking to machines; and mitigate risks with thoughtful policies, approaches, and solutions in specific industries and fields, such as law.

AI is broader than the LLMs making headlines today, and the technology we’re discussing here may not be the version of ChatGPT available in September 2024. So, consider this an evolving guide for professionals, whether you’re working within a firm or another organization:

Get personal access to paid LLMs now: Start by getting comfortable with a paid LLM (ChatGPT, Gemini, or Claude) and use it in your work and your personal life. No formal training is required. Don’t expect everything to click immediately; each interaction builds your comfort with the tool, and then suddenly it will be indispensable. Be unreasonably ambitious. Be wary of AI apps that wrap the same LLMs in expensive packaging without adding real value.

Share your AI experiences internally: Schedule regular discussions about how you’re adopting and integrating AI. AI is most effective when users are knowledgeable, and different people should be expected to find different ways to use it. Share what works for you and learn from others’ successes and challenges without expecting to emulate everything.

Collective encouragement and de-risking: As an organization, encourage open discussions about AI use without imposing strict rules beyond the necessary warnings about data and privacy. Make sure no one fears for their job. The focus should be on de-risking the sharing process and letting experience build openly and freely.

Identify applications in law: Learn how existing laws apply to AI; most AI risks are already covered by them. Then learn the new laws coming into force to address the truly novel AI-related issues.

Identify commercial AI concerns: Identify AI safety concerns in agreements with service partners, clients, and suppliers. We don’t yet know how almost any of these issues will shake out, so step cautiously.

Develop protocols to offer as services: Take what you’ve learned and develop it into formal protocols that you can offer to clients and other organizations. These protocols should reflect your real-world experience and understanding of AI’s benefits and risks.

Join societal AI governance discussions: Once you’ve gained practical experience, use it to engage in broader conversations about how society should govern AI and AI developers.

Engage in (legal) AI ethics discussions: Similarly, once you’ve acquired practical experience, join discussions about the implications of AI in your own field and industry.