Megan Garcia is a 40-year-old attorney and mother of three boys. Ms. Garcia is known for filing a wrongful death lawsuit against Character.AI following the suicide of her 14-year-old son, Sewell, in February 2024. The lawsuit alleges that interactions with a chatbot on the platform contributed to her son's death.

Pliny, known online as @elder_plinius, is an AI red teamer, prompt engineer, and agent operator.

The Future of Life Institute (FLI) is a non-profit focused on reducing global catastrophic risks, particularly those posed by advanced technologies like AI.

There is a lot of AI news competing for attention these days and, to be honest, were Ms. Garcia not an attorney, I wouldn't have taken the time to listen to her story. I'm very glad that I was tilted toward empathy in this case. Her account is far more compelling, and far more focused on the actions of the AI, than I had expected. It is also tragic, which I had expected. I recommend listening to Ms. Garcia's full interview with Kara Swisher.

Pliny specializes in exploring and exposing the vulnerabilities of large language models (LLMs) through techniques like prompt injections and jailbreaks. His work aims to enhance AI safety by identifying and addressing potential weaknesses in AI systems. Here are two perspectives that seem to capture his work accurately:

Eliezer Yudkowsky: If you've got a guy making up his own security scheme and saying "Looks solid to me!", and another guy finding the flaws in that scheme and breaking it, the second guy is the only security researcher in the room. In AI security, Pliny is the second guy.

Matt Vogel: dude just shreds safeguards ai labs [OpenAI, Anthropic, Google] put on their models. a new [AI] model drops, then like a day later, [Pliny] has it telling how you make meth and find hookers.

Yes, today a skilled AI security researcher can very easily get ChatGPT to help him "make meth and find hookers". Now think about how much kids can learn to do with new technologies.

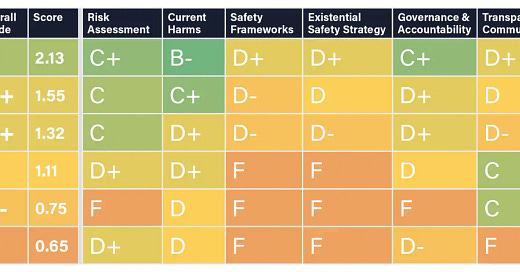

FLI came out with its annual safety and governance scorecard last week. The scorecard evaluates the practices of leading AI companies, highlighting their efforts to manage risks posed by advanced technologies. Here’s the takeaway summary chart:

While the grades may be subject to argument, the trend is not. There are significant failures of risk management among AI companies, with some lacking even basic safety measures. All flagship AI models are significantly vulnerable to adversarial attacks (e.g., Pliny's "jailbreaks"). Oversight is lacking, and profit motives demonstrably compromise internal safety practices. Companies pursuing AI that can think and act autonomously lack adequate strategies to keep these systems safe and under control, even as their ability to think and act grows increasingly powerful.

We need to take a deep breath and note that these three things now coexist: comprehensively poor risk assessments across the AI development industry, red-team security experts notoriously able to compromise AI models with ease, and grieving mothers.

All of this re-emphasizes a chart that I drew up a short while ago, which remains relevant but is probably clearer redrawn in bold marker. The "Z-curve" of Autonomy and Reliability:

When GPT-4 launched in 2023, it could instantly produce hilarious rhyming poems about serious topics in a pirate voice; sometimes the results were brilliant, and it didn't matter when they were bad. The state of the art in assessing AI Risk has had to adapt fast, and all we have to show since then are various versions of "yes, this is how it breaks": Pliny's version and Ms. Garcia's version.

AI is becoming more and more capable of having long, useful, and meaningful interactions in and with the real world. AI Risk is less and less a joke. The AI industry is not averse to wise counsel, but it is not its role to curb its own ambitions. We in the legal industry need to step up our advice to the AI industry on framing and assessing the risks inherent in building artificial intelligence. As 2024 comes to a close, this urgently needs to be a major focus of my work in the coming year. Let me know if you feel the same way.